To maintain a constant connection between an ESXi host and its storage, ESXi supports multipathing. With multipathing you can use more than one physical path for transferring data between the ESXi host and the external storage device.

If an element in the SAN, such as an HBA, switch, or cable, fails, the ESXi host can fail over to another physical path. On some devices, multipathing also offers load balancing, which redistributes I/O loads across multiple paths to reduce or eliminate potential bottlenecks.

The storage architecture in vSphere 4.0 and later supports a special VMkernel layer, Pluggable Storage Architecture (PSA). The PSA is an open modular framework that coordinates the simultaneous operation of multiple multipathing plugins (MPPs). You can manage PSA using ESXCLI commands. See Managing Third-Party Storage Arrays. This section assumes you are using only PSA plugins included in vSphere by default.

In a simple multipathing local storage topology, you can use one ESXi host with two HBAs. The ESXi host connects to a dual-port local storage system through two cables. This configuration ensures fault tolerance if one of the connection elements between the ESXi host and the local storage system fails.

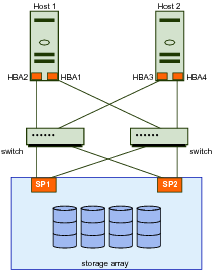

To support path switching with FC SAN, the ESXi host typically has two HBAs, each of which can reach the storage array through one or more switches. Alternatively, the setup can include one HBA and two storage processors so that the HBA can use a different path to reach the disk array.

In FC multipathing, multiple paths connect each host with the storage device. For example, if HBA1 or the link between HBA1 and the switch fails, HBA2 takes over and provides the connection between the server and the switch. The process of one HBA taking over for another is called HBA failover.

If SP1 or the link between SP1 and the switch breaks, SP2 takes over and provides the connection between the switch and the storage device. This process is called SP failover. ESXi multipathing supports HBA and SP failover.
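The HBA and SP failover behavior described above can be illustrated with a small toy model. This is only a sketch of the concept, not how the PSA or any multipathing plugin selects paths. The `Path` class, the `select_path` function, and the component names are hypothetical and chosen to match the HBA1/HBA2 and SP1/SP2 labels used in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    """One physical route from the host to the array (hypothetical model)."""
    hba: str
    sp: str


def usable_paths(paths, failed):
    """Return the paths whose HBA and SP are both outside the failed set."""
    return [p for p in paths if p.hba not in failed and p.sp not in failed]


def select_path(paths, failed):
    """Pick the first usable path, or None if every path is down."""
    candidates = usable_paths(paths, failed)
    return candidates[0] if candidates else None


# Two HBAs and two storage processors give four candidate paths.
paths = [
    Path("HBA1", "SP1"),
    Path("HBA1", "SP2"),
    Path("HBA2", "SP1"),
    Path("HBA2", "SP2"),
]

print(select_path(paths, failed=set()))      # no failure
print(select_path(paths, failed={"HBA1"}))   # HBA failover
print(select_path(paths, failed={"SP1"}))    # SP failover
```

With HBA1 marked as failed, the model falls back to a path through HBA2. With SP1 marked as failed, it falls back to a path through SP2.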

After you have set up your hardware to support multipathing, you can use the vSphere Web Client or vCLI commands to list and manage paths. The tasks described in this section include listing path information and changing the state of a path.

Important: Use ESXCLI for ESXi 5.0. Use vicfg-mpath for ESX/ESXi 4.0 or later. Use vicfg-mpath35 for ESX/ESXi 3.5.

You can list path information with ESXCLI or with vicfg-mpath.

You can run esxcli storage core path to display information about Fibre Channel or iSCSI LUNs.

Important: Use industry-standard device names, with format eui.xxx or naa.xxx to ensure consistency. Do not use VML LUN names unless device names are not available.

To display path information, run esxcli <conn_options> storage core path list. Specify one of the options listed in Connection Options in place of <conn_options>.
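If you script path listing, the command line can be assembled before it is run. The following is a minimal sketch. The function name `esxcli_path_list` is hypothetical, and the host name is a placeholder. The `list` subcommand and the `--device` and `--path` filters are not spelled out in this section, so confirm them against the command help on your system. Treating the two filters as mutually exclusive is a conservative assumption.

```python
import shlex


def esxcli_path_list(conn_options, device=None, path=None):
    """Build an argv list for `esxcli <conn_options> storage core path list`.

    conn_options is passed through unchanged, e.g. ["--server", "<host>"].
    """
    if device is not None and path is not None:
        # Assumption: filter by device or by path, not both at once.
        raise ValueError("specify device or path, not both")
    argv = ["esxcli", *conn_options, "storage", "core", "path", "list"]
    if device is not None:
        argv += ["--device", device]
    if path is not None:
        argv += ["--path", path]
    return argv


# Placeholder connection options and device name.
conn = ["--server", "esx01.example.com"]
print(shlex.join(esxcli_path_list(conn)))
print(shlex.join(esxcli_path_list(conn, device="naa.xxx")))
```

The function only builds the command. To run it, pass the returned list to `subprocess.run`.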

You can run vicfg-mpath to list information about Fibre Channel or iSCSI LUNs.

Important: Use industry-standard device names, with format eui.xxx or naa.xxx to ensure consistency. Do not use VML LUN names unless device names are not available.
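The naming guidance above can be expressed as a small selection rule for scripts that receive several identifiers for the same device. This is a sketch only. The function names are hypothetical, and the names `naa.xxx`, `eui.xxx`, and `vml.xxx` are placeholders that follow the formats mentioned in the note, not real identifiers.

```python
STANDARD_PREFIXES = ("naa.", "eui.")


def name_kind(name):
    """Classify a device name as 'standard', 'vml', or 'other' by its prefix."""
    lowered = name.lower()
    if lowered.startswith(STANDARD_PREFIXES):
        return "standard"
    if lowered.startswith("vml."):
        return "vml"
    return "other"


def pick_device_name(candidates):
    """Prefer a naa./eui. name; fall back to a VML name only if none exists."""
    for wanted in ("standard", "vml"):
        for name in candidates:
            if name_kind(name) == wanted:
                return name
    return None


print(pick_device_name(["vml.xxx", "naa.xxx"]))
print(pick_device_name(["vml.xxx"]))
```

The first call selects the naa name even though the VML name comes first in the list. The second call falls back to the VML name because no industry-standard name is available.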

You can display information about paths by running vicfg-mpath with one of the following options. Specify one of the options listed in Connection Options in place of <conn_options>.

■ List paths and detailed information by specifying the path UID (long path). The path UID is the first item in the vicfg-mpath --list display.

The returned information includes the runtime name, device, device display name, adapter, adapter identifier, target identifier, plugin, state, transport, and adapter and target transport details.
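A lookup by path UID with vicfg-mpath can be assembled in the same way. The following is a hedged sketch. The function name `vicfg_mpath_list` is hypothetical, and the host name and path UID are placeholders. The `--list` option appears in the text above. Passing the UID with `--path` is an assumption to verify against the vicfg-mpath help output.

```python
import shlex


def vicfg_mpath_list(conn_options, path_uid=None):
    """Build an argv list for `vicfg-mpath <conn_options> --list`.

    If path_uid is given, detailed information is requested for that
    path only (assumed flag: --path).
    """
    argv = ["vicfg-mpath", *conn_options, "--list"]
    if path_uid is not None:
        argv += ["--path", path_uid]
    return argv


# Placeholder connection options; "<path-uid>" stands for the first item
# of an earlier `vicfg-mpath --list` display.
conn = ["--server", "esx01.example.com"]
print(shlex.join(vicfg_mpath_list(conn)))
print(shlex.join(vicfg_mpath_list(conn, path_uid="<path-uid>")))
```

A typical flow runs the first command, reads the path UID from its output, and then runs the second command with that UID.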

You can temporarily disable paths for maintenance or other reasons, and enable them again when you need them. You can disable paths with ESXCLI. Specify one of the options listed in Connection Options in place of <conn_options>.

A path state change fails if I/O is active on the path when you change the setting. If that happens, reissue the command. The change takes effect only after at least one I/O operation has been issued.
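Because a state change can fail while I/O is active and then needs to be reissued, a script can wrap the command in a simple retry loop. The sketch below makes several assumptions: the `set` subcommand with `--state` and `--path`, the state values `active` and `off`, and a nonzero exit code on failure are not stated in this section and should be checked against the command help. The function names, the host name, and the path name `vmhba32:C0:T1:L0` are placeholders.

```python
import subprocess
import time

VALID_STATES = ("active", "off")  # assumed values for --state


def esxcli_path_set(conn_options, path, state):
    """Build an argv list for `esxcli <conn_options> storage core path set`."""
    if state not in VALID_STATES:
        raise ValueError(f"state must be one of {VALID_STATES}, got {state!r}")
    return ["esxcli", *conn_options, "storage", "core", "path", "set",
            "--state", state, "--path", path]


def run_with_retry(argv, attempts=3, delay=2.0, runner=None):
    """Run argv until it exits with 0; return the number of attempts used.

    runner takes argv and returns an exit code. It defaults to
    subprocess.run and can be replaced for dry runs.
    """
    if runner is None:
        runner = lambda a: subprocess.run(a).returncode
    for attempt in range(1, attempts + 1):
        if runner(argv) == 0:
            return attempt
        if attempt < attempts:
            time.sleep(delay)  # give in-flight I/O time to drain
    raise RuntimeError(f"command still failing after {attempts} attempts")


# Dry run: print the command instead of contacting a host.
disable = esxcli_path_set(["--server", "esx01.example.com"],
                          "vmhba32:C0:T1:L0", "off")
print(" ".join(disable))
```

Calling `run_with_retry(disable)` would execute the command and reissue it up to two more times if it fails.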

You can disable paths with vicfg-mpath. Specify one of the options listed in Connection Options in place of <conn_options>.

As with ESXCLI, a path state change fails if I/O is active on the path when you change the setting. If that happens, reissue the command. The change takes effect only after at least one I/O operation has been issued.
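For a maintenance window, the disable and enable commands for vicfg-mpath can be prepared as a pair. This is a sketch under assumptions: the `--state` and `--path` options and the values `off` and `active` are not listed in this section, so verify them with the vicfg-mpath help output. The function names, host name, and path name are hypothetical placeholders.

```python
def vicfg_mpath_state(conn_options, path, state):
    """Build an argv list for `vicfg-mpath <conn_options> --state ... --path ...`."""
    if state not in ("active", "off"):  # assumed valid states
        raise ValueError(f"unsupported state: {state!r}")
    return ["vicfg-mpath", *conn_options, "--state", state, "--path", path]


def maintenance_commands(conn_options, path):
    """Return (disable, enable) argv lists for one path."""
    return (
        vicfg_mpath_state(conn_options, path, "off"),
        vicfg_mpath_state(conn_options, path, "active"),
    )


# Placeholder connection options and runtime path name.
disable, enable = maintenance_commands(["--server", "esx01.example.com"],
                                       "vmhba32:C0:T1:L0")
print(" ".join(disable))
print(" ".join(enable))
```

Run the first command before maintenance and the second one afterward, reissuing either command if it fails because I/O is active.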